Every brand carries a principle at its core. For this project, that principle was observability — the ability to scan, reveal, and stay aware based on data.

The dunes became our metaphor for this: surfaces that shift, carry patterns, and extend beyond the frame.

We began with static studies in GPT Sora, exploring how grayscale dunes could carry rhythm and continuity. These previews were part of a theory we decided to test in practice. Using Cavalry, we could manipulate the dot grid, but it needed “food” — so we started feeding it with new tools.

Building the device

To make the metaphor breathe, we introduced a device — camera movement. From there, we orchestrated motion in Weavy.

Runway Gen-3 created the base movement and Runway Gen-4 gave us looping sequences, though it honestly took a few hours of prompting to spell out the exact kind of motion we wanted. When Kling 2.1 was released a week later, the system's scalability expanded instantly.

Weavy workflow

By letting the camera travel through the dunes, the landscapes no longer sat still and became environments under observation. The real shift happened once we brought the dunes into Cavalry and transformed them into a system of dots.

Here, three simple inputs gave us full control:

dot size and grid edit

colour customisation

background colour edit

Using the duplicator attributes, we treated dunes as grids of dots that could be scaled row by row and column by column, a way to adjust how much information the surface revealed at once.
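To make the grid idea concrete, here is a minimal Python sketch of it. This is not how Cavalry implements its duplicator. It only illustrates the principle of sampling a grayscale frame at a chosen number of rows and columns. The names `Dot` and `sample_dot_grid` are hypothetical, and the frame is assumed to be a plain list of rows with luminance values between 0 and 1.

```python
from dataclasses import dataclass


@dataclass
class Dot:
    x: float          # horizontal centre of the dot, in source-frame pixels
    y: float          # vertical centre of the dot, in source-frame pixels
    luminance: float  # brightness sampled from the frame, 0 (dark) to 1 (light)


def sample_dot_grid(frame, rows, cols):
    """Reduce a grayscale frame to a rows x cols grid of dots.

    `frame` is assumed to be a non-empty list of equal-length rows,
    each holding luminance values in the range 0..1.
    """
    height = len(frame)
    width = len(frame[0])
    cell_h = height / rows
    cell_w = width / cols

    dots = []
    for r in range(rows):
        for c in range(cols):
            # Place each dot at the centre of its grid cell.
            cy = (r + 0.5) * cell_h
            cx = (c + 0.5) * cell_w
            # Sample the nearest pixel, clamped to the frame bounds.
            py = min(int(cy), height - 1)
            px = min(int(cx), width - 1)
            dots.append(Dot(cx, cy, frame[py][px]))
    return dots
```

Raising `rows` and `cols` makes the surface reveal more of the source frame at once, and lowering them abstracts it. That is the trade-off the grid controls are used for.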

With the color blend attribute, we set two colours and used a gradient to control the transition between them. The contrast from the source video drives the mapping: the darkest zones translate into a deep green, while lighter areas transition into softer tones from the palette. This way, the dunes respond directly to moving input instead of staying fixed.
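The mapping from brightness to colour can be sketched as a linear blend between two endpoints. The Python below is an illustration under assumptions, not Cavalry's own implementation: the hex values are placeholders rather than the actual brand palette, and `hex_to_rgb` and `blend` are hypothetical helper names.

```python
def hex_to_rgb(value):
    """Convert a '#RRGGBB' string to an (r, g, b) tuple of ints."""
    value = value.lstrip("#")
    return tuple(int(value[i:i + 2], 16) for i in (0, 2, 4))


def blend(dark, light, luminance):
    """Linearly interpolate between two RGB colours.

    A luminance of 0 returns `dark`, 1 returns `light`, and values
    outside that range are clamped.
    """
    t = max(0.0, min(1.0, luminance))
    return tuple(round(d + (l - d) * t) for d, l in zip(dark, light))


# Placeholder colours for illustration only (not the real palette).
DEEP_GREEN = hex_to_rgb("#0B3D2E")
SOFT_TONE = hex_to_rgb("#DCE8D2")

# Each sampled brightness value from the video frame picks a colour.
for luminance in (0.0, 0.5, 1.0):
    print(luminance, blend(DEEP_GREEN, SOFT_TONE, luminance))
```

Because the colour is recomputed from each frame's brightness, the palette follows the motion of the source video instead of being baked into the artwork.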

Finally, the background. A simple rectangular shape sits on the bottom layer, sized to fit the entire composition. From the attributes panel, the fill can be adjusted to any colour. It’s a small step, but it makes the system flexible enough to adapt to different contexts and brand environments.

From system to mockups

Once the system was stable in Cavalry, we moved to testing it in context. For the product itself, we created a looped background animation.

Alongside the visual language, we also built a system inside Weavy for creating mockups that are always on brand.

Instead of designing mockups one by one, the flow allows us to generate, recolour, and adapt scenes automatically while maintaining complete consistency with the identity. Refinement came through Google Imagen 4, Flux Kontext, Topaz, Magnific, and Bria, with animation in Seedance.

Weavy workflow

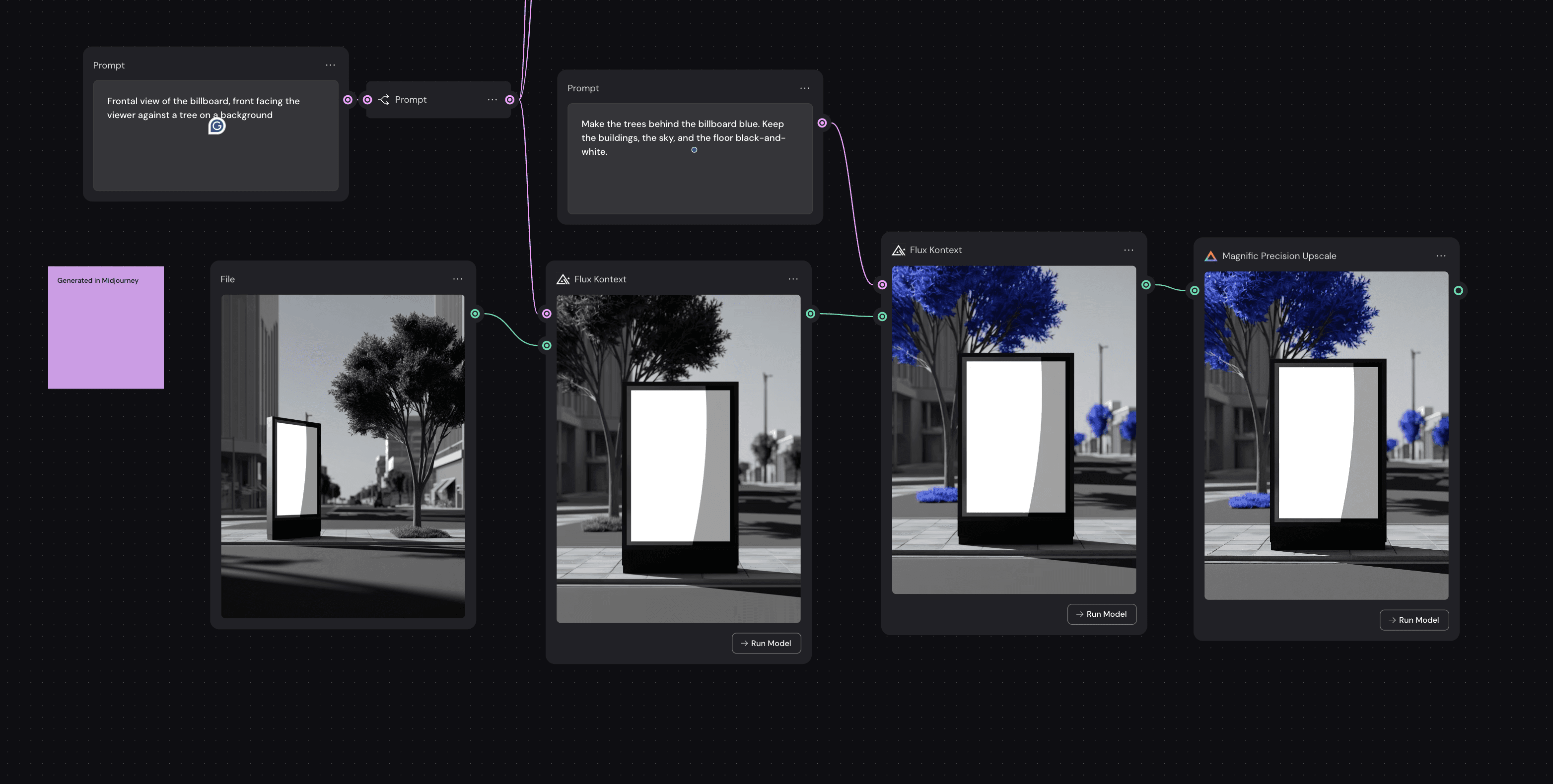

This partial flow gives a glimpse of that process:

We began with a base render (generated in Midjourney).

With Flux Kontext, we switched the angle and isolated elements — turning the trees behind the billboard into bright blue while keeping buildings, sky, and floor in grayscale.

Using Magnific Precision Upscale, we refined details so the output felt production-ready.

AI Models used:

Google Imagen 4

Flux Kontext

Topaz Upscale & Topaz Sharpen

Magnific Precision Upscale

Bria Content-aware Fill

Midjourney with personalization code --p kp7a36z

Seedance V1.0

The result was a set of on-brand animated mockups, in horizontal and vertical formats, that matched the colour concept of the system itself.

What stayed with us most were the moments of discovery: testing prompts for hours, watching a camera path click into place with a seamless loop, or seeing a grid of dots behave like a system. Building with these tools feels like learning a new instrument: awkward at first, but increasingly expressive once the rhythm emerges. This project gave us space to explore, to try, to learn by doing, and that sense of play is what keeps the work alive for us.

Credits:

Anastasiia Bezhevets — Gen AI Artist, Lead PM

Ariana Montero — Visual Designer

Julian Bauer — Co-Founder, Creative Director